Rightsizing Usability Testing

Test design, delivery, and cadence depend not only on the questions the team wants to ask, but also on the priorities and ways of working we’ve established. Scientific rigor is weighed against business goals to define acceptable risk. A researcher can own the insights or facilitate team ownership and synthesis. Here are some approaches I’ve taken to align with goals and expectations across the product team.

Robust Summative Testing

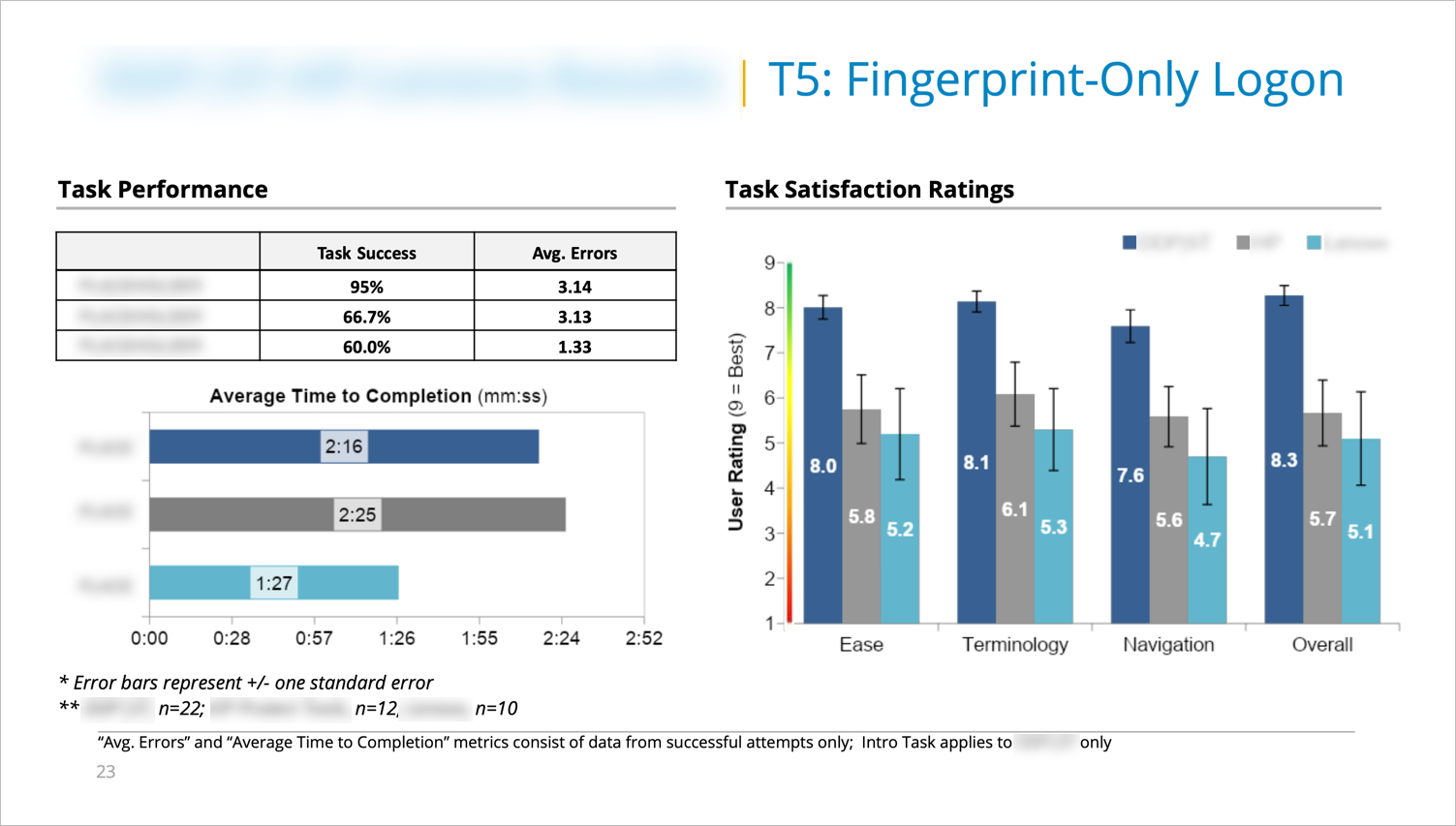

Dell Data Protection is a suite of enterprise security management tools. The program team needed to assess the usability of Dell’s local security management offering, Security Tools (DDP | ST), and understand where it sits in the competitive landscape.

I designed and conducted a competitive benchmark for installing and using industry-leading security offerings. I researched primary competitors, procured two competing systems to test, and ensured valid, statistically significant test data with a within-subjects design and counterbalanced tasks. I led the study but shared moderating across two comparative rounds with a contract usability engineer whom I supervised. I owned the meta-analysis and reporting across both rounds.
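
To illustrate what counterbalancing looks like in practice, here is a minimal Python sketch that cycles participants through every possible presentation order. It is an assumption-laden example, not the schedule used in the study: the product labels, the participant count, and the choice of full counterbalancing (rather than, say, a Latin square) are all hypothetical.

```python
from itertools import permutations


def counterbalanced_orders(conditions, n_participants):
    """Assign each participant one ordering, cycling through all permutations."""
    orders = list(permutations(conditions))
    return [orders[i % len(orders)] for i in range(n_participants)]


# Hypothetical labels and sample size; the study's actual values are not given here.
conditions = ["Product A", "Product B", "Product C"]
schedule = counterbalanced_orders(conditions, n_participants=12)

for participant, order in enumerate(schedule, start=1):
    print(f"P{participant:02d}: {' -> '.join(order)}")
```

With three conditions and a participant count that is a multiple of six, each product appears in each position equally often, which keeps learning and fatigue effects from favoring any one product.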

Impact

- Established performance benchmarks including task success, time on task, average non-critical errors, SUS-based ratings, and NPS

- The assessment directly informed new capabilities and helped establish differentiators that moved DDP | ST from being regarded as bloatware to a best-in-class security offering
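
For readers unfamiliar with two of the benchmark metrics above, the sketch below shows the standard scoring for the System Usability Scale (SUS) and the Net Promoter Score (NPS). The study used SUS-based ratings, so its exact instrument may have differed; the function names and sample responses here are hypothetical.

```python
def sus_score(responses):
    """Score one participant's 10 SUS items (each rated 1-5) on a 0-100 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs exactly 10 responses, each from 1 to 5")
    total = 0
    for item, rating in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (rating - 1) if item % 2 == 1 else (5 - rating)
    return total * 2.5


def nps(ratings):
    """Net Promoter Score from 0-10 ratings: % promoters minus % detractors."""
    if not ratings:
        raise ValueError("NPS needs at least one rating")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical sample data for illustration only.
print(sus_score([3] * 10))          # neutral answers on every item
print(nps([10, 9, 8, 7, 6, 0]))     # two promoters, two detractors
```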

Faster and Leaner Iterative Testing

OpenStax prioritizes usability testing of all new capabilities and changes. However, as in any Agile shop, testing needs to be lean, fast, and flexible, and testers need to be resourceful.

As UX research lead, I wanted to establish a usability testing protocol at OpenStax to improve consistency, effectiveness, and turnaround times in a sprint-based environment.

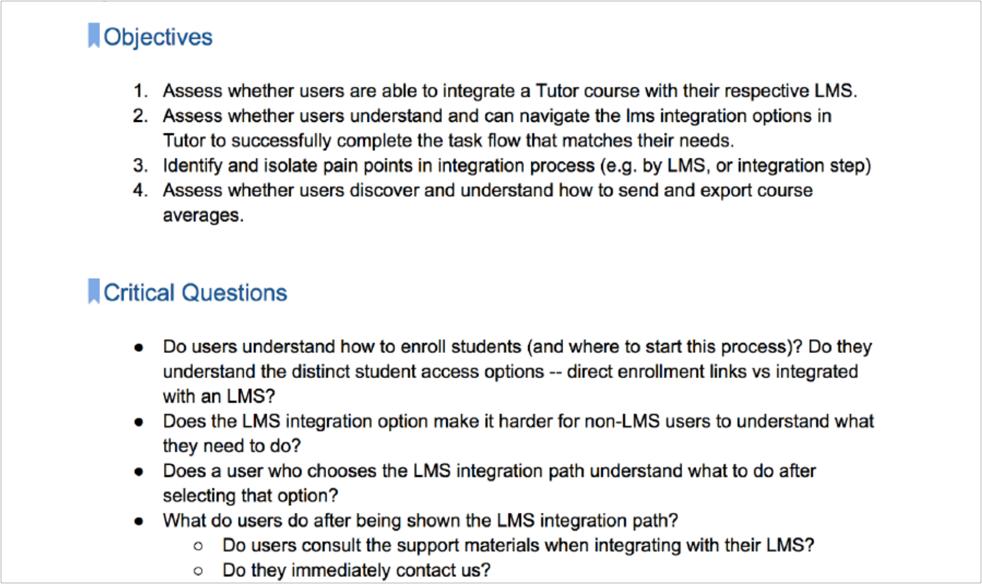

I ensured that all test plans, no matter how lean, started with hypotheses and/or objectives and critical research questions. Those led to a script with explicit language and “stage directions” to ensure an easy handoff to multiple facilitators.

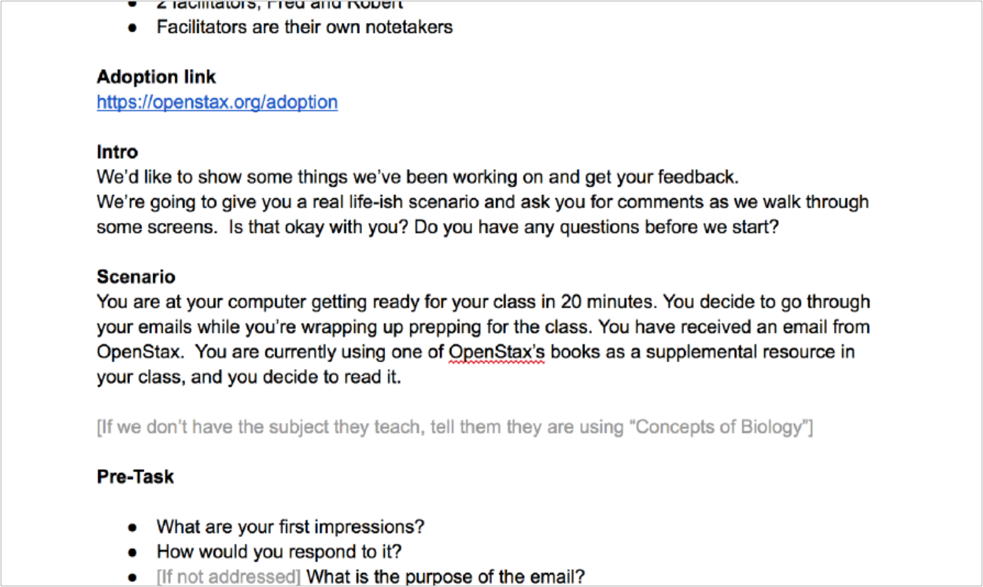

I always built around scenarios that captured real-world contexts and placed features within larger task flows. For example, when analytics told us users were abandoning our book adoption form, I was asked to test the form’s micro-interactions. However, I built a scenario that started from the form’s touchpoint, a marketing email. We found that users didn’t expect to be led to a form, but rather to more information on book adoptions. We then revised the content and CTAs to telegraph the form more clearly, significantly reducing drop-offs.

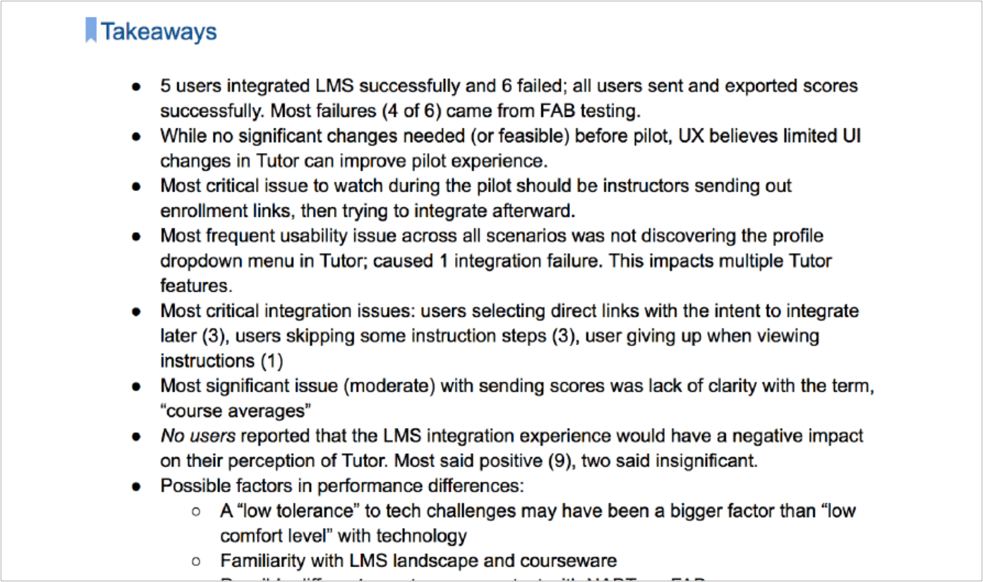

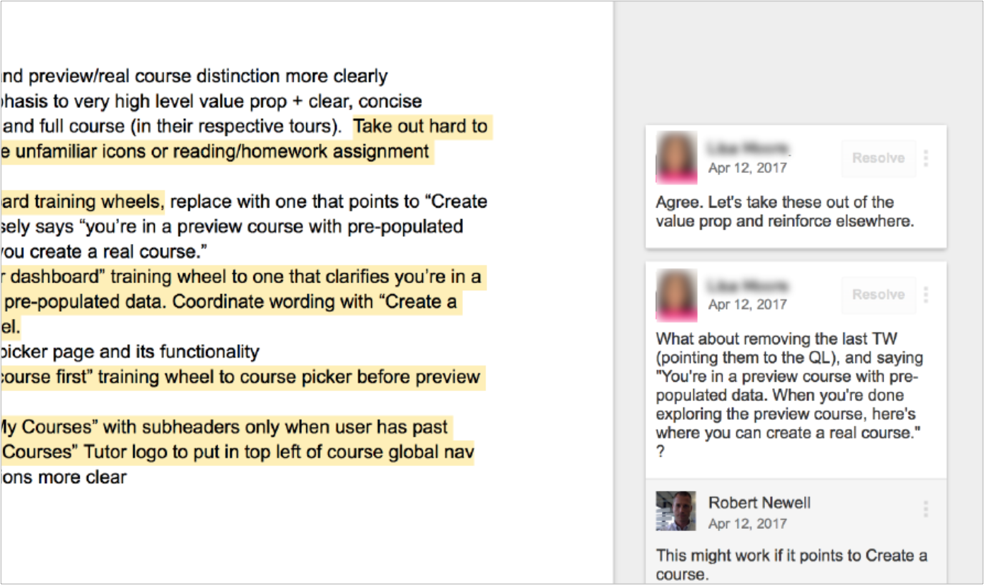

We stayed flexible with test plans, sometimes performing rapid iterations between test sessions based on early findings (RITE testing). Findings reports were no-frills Google Docs that led with takeaways and recommendations, followed by a detailed summary and itemized usability issues.

Since I worked closely with the product manager, I didn’t need illustrations or complicated slide decks to communicate findings, so plain documents were enough. Keeping reports in Google Docs also made it easy to link user stories to video clips, and it encouraged stakeholders to discuss and decide directly in the report.

Finally, I created test plan and findings report templates to speed up planning for other team members and ensure a consistent protocol across tests.

Impact

- Faster and more frequent iterations led to better experiences: when OpenStax Tutor Beta launched in Fall 2017, customer support saw a 20% drop in support requests

- Established 3- to 6-hour turnaround times for findings reports after most testing

- Templates and explicit test plans ensured reliability across facilitators and tests

- Established rapid iterative prototyping (RITE testing) within tests

- Enabled remote and live testing with multiple facilitators beyond the UX team

Team Insights in a 4-Day Design Sprint

Pearson moved to 1-week design sprints to accelerate iteration and handoffs to development. While the designer led the ideation and I led the testing, we all moved through the sprint activities as a group. We collaborated remotely through several Mural boards.

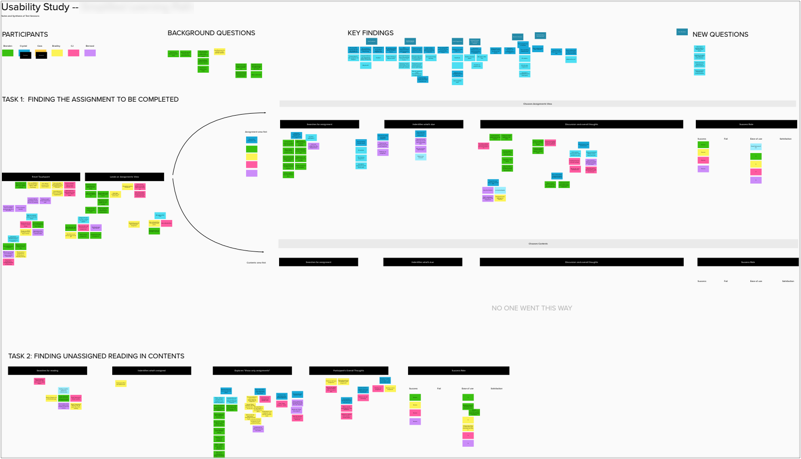

I built the test plan in one afternoon from a rough storyboard while the UX designer built the prototype in parallel. The next morning I moderated all the test sessions while the team took notes in a Mural template I created.

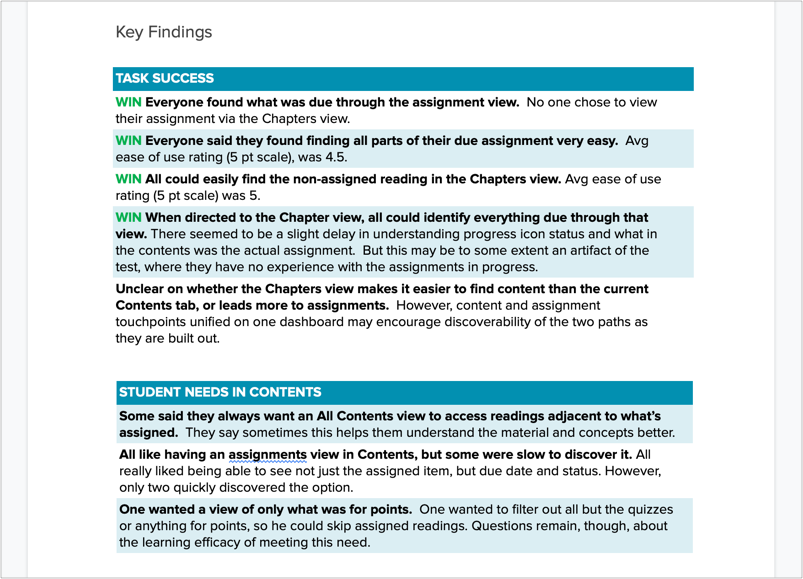

I led the synthesis with the group that afternoon. We documented success rates and ease ratings and clustered qualitative observations into themes. Rather than own the insights as an analyst, I shared that job with the entire team as a facilitator. We pulled out key findings and highlighted them on the Mural board. I documented them later in a simply formatted Word doc for easier reading and access by non-participating stakeholders.

Impact

- Problem to validated solution in four days, with visual design handoff to development within a sprint, sometimes twice within a sprint. This increased velocity and moved our process further away from waterfall.

- Moved to testing all in one morning with synthesis in an hour that afternoon

- The team owned and shared the insights, improving alignment and requiring less documentation

- Broke down silos by bringing stakeholders across departments together to ideate and validate as one team for the first time